We recently faced the need to let partner companies insert data into our customers’ databases, so we had to design and implement an API that lets these authorized users do just that.

Given that our application is built on top of Symfony, the easiest solution would have been to add a controller to receive the data, validate it, insert it into the designated shards, and finally return the result to the client. Unfortunately, this solution would force the server to execute the task in real time, even during traffic peaks. Because a single task can involve many customers, it could take long enough for the request to time out. It would also force us to integrate another authentication system into the existing application.

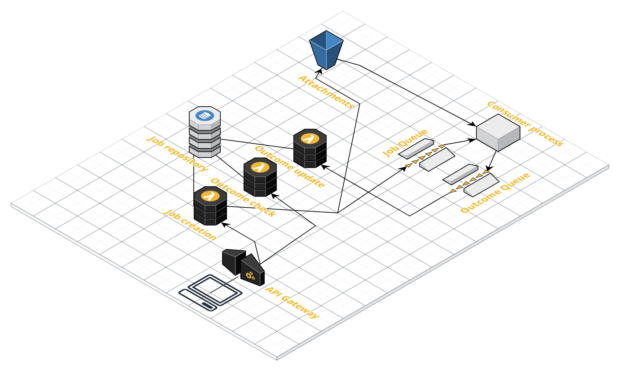

To avoid these problems, we chose to decouple the service from the main application using API Gateway. This AWS product lets you create API methods through configuration alone; even authentication is fully managed, through Cognito.

To fully decouple the systems, we chose to have all communication between the API and the main application pass through SQS. The messages are processed by the existing consumer machines, so the web servers’ load is not affected in any way. In effect, this API is a way to accept jobs from authorized partners. Since that is its only function, the API exposes just two methods: one to submit a new job and another to check the result by polling. To keep integration simple, we decided not to implement a webhook system at this first stage.
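From a partner's point of view, the submit-then-poll flow could look roughly like the sketch below. It is only an illustration: the status values, the shape of the job record, and the `fetch_status` callable (standing in for an HTTP call to the status endpoint) are assumptions, not the actual API contract.

```python
import time
from typing import Callable, Optional

# Hypothetical terminal states; the real API may name them differently.
TERMINAL_STATUSES = {"SUCCEEDED", "FAILED"}


def wait_for_job(
    fetch_status: Callable[[str], dict],
    job_id: str,
    interval_seconds: float = 2.0,
    max_attempts: int = 30,
    sleep: Callable[[float], None] = time.sleep,
) -> Optional[dict]:
    """Poll the status endpoint until the job finishes or attempts run out.

    `fetch_status` is a placeholder for whatever performs the authenticated
    request to the status method and returns the decoded job record.
    """
    for attempt in range(max_attempts):
        job = fetch_status(job_id)
        if job.get("status") in TERMINAL_STATUSES:
            return job
        # Do not sleep after the final attempt.
        if attempt < max_attempts - 1:
            sleep(interval_seconds)
    return None  # The job is still running; the caller decides what to do next.
```

Passing `sleep` as a parameter keeps the loop easy to test without real delays.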

API Gateway can validate input data: you only need to create a JSON Schema model and bind it to the method. After validation, the request is forwarded to a Lambda function that performs additional, more complex validations, including uniqueness constraints specific to our domain, and then sends the data to the SQS queue. The system also keeps a list of jobs and their statuses in a DynamoDB table, and the job ID is returned to the client.
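The submit path described above can be sketched as follows. This is a minimal illustration rather than our production function: the `jobId` and `status` attribute names, the `PENDING` value, the HTTP status codes, and the `domain_checks` callable are all assumptions. The table and queue clients are passed in and only need to behave like a boto3 DynamoDB `Table` resource (`put_item`) and a boto3 SQS client (`send_message`).

```python
import json
import uuid
from typing import Any, Callable, List


def submit_job(
    payload: dict,
    jobs_table: Any,  # assumed to behave like a boto3 DynamoDB Table resource
    sqs: Any,         # assumed to behave like a boto3 SQS client
    queue_url: str,
    domain_checks: Callable[[dict], List[str]],  # hypothetical: returns error strings
) -> dict:
    """Validate an already schema-checked payload, record the job, enqueue it."""
    errors = domain_checks(payload)
    if errors:
        return {"statusCode": 422, "body": json.dumps({"errors": errors})}

    job_id = str(uuid.uuid4())
    # Record the job first so a status lookup never misses an accepted job.
    jobs_table.put_item(Item={"jobId": job_id, "status": "PENDING"})
    sqs.send_message(
        QueueUrl=queue_url,
        MessageBody=json.dumps({"jobId": job_id, "input": payload}),
    )
    # The client uses this ID to poll for the result later.
    return {"statusCode": 202, "body": json.dumps({"jobId": job_id})}
```

Injecting the clients keeps the function independent of AWS credentials in tests; in a real Lambda they would typically be created once at module load.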

The consumer servers receive the messages containing the job input, execute the jobs, and then send another message containing the job result to a response queue. These messages invoke another Lambda function that updates the job status in the DynamoDB table. The second API endpoint, which lets the client check the job result, simply fetches that value from the DynamoDB table using the provided job ID.
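The return path can be sketched in the same spirit. The example assumes the response message is a JSON object with `jobId`, `status`, and `result` fields, which is an illustrative format and not necessarily the one we use. The event shape (`Records` with a `body` string) is the one Lambda receives from an SQS trigger, and the table object only needs to behave like a boto3 DynamoDB `Table` resource.

```python
import json
from typing import Any, Optional


def handle_results(event: dict, jobs_table: Any) -> None:
    """Lambda-style handler for messages arriving from the response queue."""
    for record in event["Records"]:
        message = json.loads(record["body"])
        jobs_table.update_item(
            Key={"jobId": message["jobId"]},
            # Aliases avoid clashes with DynamoDB reserved words such as "status".
            UpdateExpression="SET #s = :status, #r = :result",
            ExpressionAttributeNames={"#s": "status", "#r": "result"},
            ExpressionAttributeValues={
                ":status": message["status"],
                # Stored as a JSON string to sidestep number-type conversions.
                ":result": json.dumps(message["result"]),
            },
        )


def get_job(job_id: str, jobs_table: Any) -> Optional[dict]:
    """Backs the status endpoint: return the stored job record, or None."""
    response = jobs_table.get_item(Key={"jobId": job_id})
    return response.get("Item")
```

The status endpoint would then map a `None` result to a "not found" response and anything else to the job record.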

In our case, the data can contain file attachments that easily exceed the SQS message size limit (256 KB at the time of writing). For this reason, the Lambda function saves these files to S3 and the message contains only a reference to them.
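A minimal sketch of this offloading step is shown below. It assumes, purely for illustration, that attachments arrive as base64-encoded strings in an `attachments` list with `filename` and `content` fields, and that objects are stored under a `jobs/<job id>/` prefix. The `s3` argument only needs to behave like a boto3 S3 client (`put_object`).

```python
import base64
import uuid
from typing import Any


def offload_attachments(payload: dict, s3: Any, bucket: str, job_id: str) -> dict:
    """Return a copy of the payload whose attachments are S3 references."""
    slim = dict(payload)  # shallow copy; the caller's payload is left untouched
    references = []
    for attachment in payload.get("attachments", []):
        # Hypothetical key layout: one random object name per file, grouped by job.
        key = f"jobs/{job_id}/{uuid.uuid4()}"
        s3.put_object(
            Bucket=bucket,
            Key=key,
            Body=base64.b64decode(attachment["content"]),
        )
        references.append(
            {"filename": attachment["filename"], "bucket": bucket, "key": key}
        )
    slim["attachments"] = references
    return slim
```

The consumer can then download each file using the `bucket` and `key` in the message, so the queue only ever carries small references.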

The main application contains only the code that inserts the data into the customers’ databases. We just added the “symfony/amazon-sqs-messenger” component as a dependency, configured the queues, and implemented a handler class that receives the already deserialized message, invokes the data insertion routine, and sends the results to the message bus.

The overall architecture is represented in the following diagram:

One big upside of this solution is the virtually unlimited scalability granted by the totally serverless approach. With tools like CDK, it’s also easy to deploy multiple copies of the entire infrastructure in just seconds, whether to run tests, provide test environments to partners, or perform blue-green deployments.